Curated Analysis Task Results

Results on the curated problems for the Analysis task, in both multiple-choice and open-ended formats.

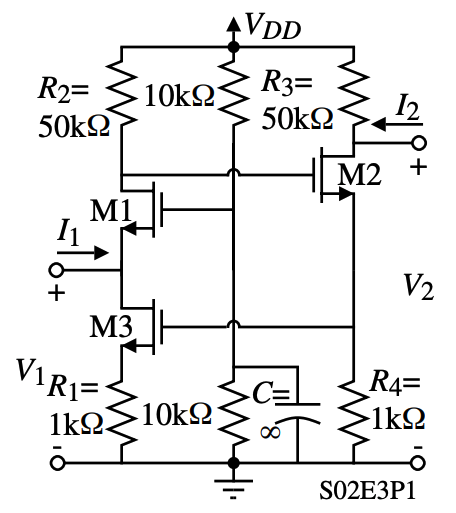

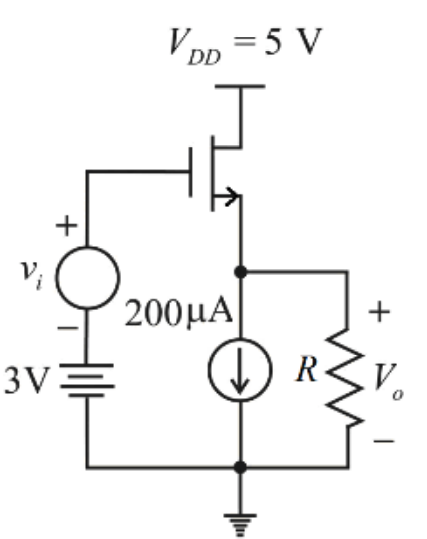

| Model | Level 0 (Resistor) | Level 1 (RLC) | Level 2 (Small Signal) | Level 3 (Transistor) | Level 4 (Block) | Overall Accuracy |
|---|---|---|---|---|---|---|
| Multiple-choice format (%) | | | | | | |
| GPT-4o | 39.80 | 49.58 | 32.88 | 48.80 | 39.58 | 45.07 |
| Claude-Sonnet-4 | 66.72 | 71.22 | 61.64 | 72.01 | 66.67 | 69.67 |
| Gemini-2.5-Pro | 74.04 | 87.39 | 78.08 | 81.72 | 89.58 | 80.71 |
| InternVL3-78B | 23.16 | 20.59 | 13.70 | 13.11 | 14.58 | 18.06 |
| Qwen2.5-VL-72B-Instruct | 29.53 | 41.60 | 30.14 | 35.94 | 29.17 | 34.90 |
| GLM-4.5V | 24.63 | 29.20 | 9.59 | 17.28 | 31.25 | 22.42 |
| Open-ended format (%) | | | | | | |
| GPT-4o | 29.59 | 29.83 | 19.18 | 13.96 | 17.81 | 22.84 |
| Claude-Sonnet-4 | 35.56 | 50.21 | 12.33 | 27.04 | 33.33 | 34.76 |
| Gemini-2.5-Pro | 76.98 | 84.87 | 73.97 | 55.85 | 72.92 | 70.32 |
| InternVL3-78B | 20.79 | 19.54 | 6.85 | 14.47 | 10.42 | 17.26 |
| Qwen2.5-VL-72B-Instruct | 28.73 | 31.30 | 16.44 | 13.71 | 22.92 | 22.85 |
| GLM-4.5V | 34.44 | 39.71 | 13.70 | 19.50 | 25.00 | 28.83 |
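To compare the two formats at a glance, here is a small Python sketch that subtracts each model's open-ended Overall Accuracy from its multiple-choice Overall Accuracy. The values are copied from the table above. The names `OVERALL` and `format_gap` are illustrative and are not part of any released evaluation code.

```python
# Overall accuracy (%) copied from the table above: (multiple-choice, open-ended).
# OVERALL and format_gap are hypothetical names used only for this illustration.
OVERALL = {
    "GPT-4o": (45.07, 22.84),
    "Claude-Sonnet-4": (69.67, 34.76),
    "Gemini-2.5-Pro": (80.71, 70.32),
    "InternVL3-78B": (18.06, 17.26),
    "Qwen2.5-VL-72B-Instruct": (34.90, 22.85),
    "GLM-4.5V": (22.42, 28.83),
}


def format_gap(scores):
    """Return {model: multiple-choice minus open-ended}, in percentage points."""
    # round() hides float noise such as 10.389999999999986
    return {model: round(mc - oe, 2) for model, (mc, oe) in scores.items()}


if __name__ == "__main__":
    gaps = format_gap(OVERALL)
    # List models from the largest drop to the smallest (negative = open-ended scored higher)
    for model, gap in sorted(gaps.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{model:<26} {gap:+6.2f} pp")
```

A negative gap means the model scored higher in the open-ended format. In this table that holds only for GLM-4.5V (28.83 versus 22.42).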